About

Almost everyone in the LLM space has heard of MCP (Model Context Protocol).

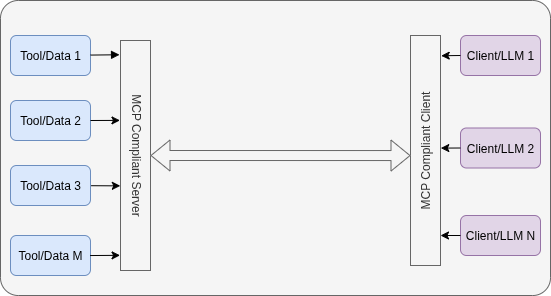

For those unfamiliar: MCP, introduced by Anthropic, is an open standard that lets businesses expose their APIs so that any MCP-compliant client can interact with them seamlessly. Imagine chatting directly with your services from Claude Desktop, without building a custom integration for every LLM.

At Agent Boutique AI, as we experiment with bringing chat-based experiences to our customers’ B2B APIs, we think we owe it to the community to share our thoughts on more than just what MCP is.

Past (Recap)

Before MCP, suppose you were building an LLM application for your product platform and wanted it to be LLM-agnostic.

Say your platform has M functions/tools that must be available to N LLMs (OpenAI, Claude, Gemini, etc.). You would have to manage M×N implementations: support for calling each tool, written separately for each LLM.
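To make the M×N cost concrete, here is a minimal sketch under simplified assumptions: two hypothetical tools (get_invoice, cancel_order), two providers, and tool-call payloads trimmed down to the general shape of OpenAI-style calls (arguments arrive as a JSON string) and Claude-style tool_use blocks (arguments arrive as an object). These are not complete vendor payloads. Each (tool, LLM) pair gets its own glue function.

```python
import json
from typing import Callable, Dict

# Hypothetical platform tools (M = 2).
def get_invoice(invoice_id: str) -> dict:
    return {"invoice_id": invoice_id, "status": "paid"}

def cancel_order(order_id: str) -> dict:
    return {"order_id": order_id, "cancelled": True}

# Hand-written glue: one function per (LLM, tool) pair, so M x N = 4 here.
# Payload shapes are simplified illustrations, not exact vendor formats.
def openai_get_invoice(call: dict) -> dict:
    args = json.loads(call["function"]["arguments"])  # arguments as a JSON string
    return get_invoice(args["invoice_id"])

def openai_cancel_order(call: dict) -> dict:
    args = json.loads(call["function"]["arguments"])
    return cancel_order(args["order_id"])

def claude_get_invoice(block: dict) -> dict:
    return get_invoice(block["input"]["invoice_id"])  # arguments as an object

def claude_cancel_order(block: dict) -> dict:
    return cancel_order(block["input"]["order_id"])

GLUE: Dict[tuple, Callable[[dict], dict]] = {
    ("openai", "get_invoice"): openai_get_invoice,
    ("openai", "cancel_order"): openai_cancel_order,
    ("claude", "get_invoice"): claude_get_invoice,
    ("claude", "cancel_order"): claude_cancel_order,
}

openai_call = {"type": "function",
               "function": {"name": "get_invoice", "arguments": '{"invoice_id": "INV-1"}'}}
claude_call = {"type": "tool_use", "name": "cancel_order", "input": {"order_id": "ORD-9"}}

print(GLUE[("openai", "get_invoice")](openai_call))
print(GLUE[("claude", "cancel_order")](claude_call))
print(len(GLUE))  # number of integrations to maintain: M x N
```

Add a third LLM and you write two more adapters; add a third tool and you write one more adapter per LLM.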

Present

As of June 2025, MCP has rapidly become a de facto standard for connecting LLMs to external tools, significantly simplifying the scenario above.

Return to the earlier example, where integrating M tools with N LLMs required M×N implementations.

With MCP, here’s what you do:

- Build all your M tools and expose them through one or more MCP servers (M implementations).

- Build your client with integrations for each of the N LLMs, and make it MCP-compliant (N implementations).

Boom! With M + N implementations instead of M×N, every tool now works with ALL the LLMs your client supports.
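A rough, standard-library-only sketch of that M + N split follows. The server side answers JSON-RPC 2.0 requests for the MCP methods tools/list and tools/call, describing each tool with MCP-style name, description and inputSchema fields; the client side converts that one description into each LLM's tool format. The tool itself is hypothetical, the provider formats are simplified, and the sketch omits the initialize handshake, the transport (stdio or HTTP), and everything else an official MCP SDK would handle.

```python
import json
from typing import Any, Dict

# --- Server side: each tool is written once (M implementations) ---
# Hypothetical tool described with MCP-style fields.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_invoice": {
        "description": "Fetch an invoice by id",
        "inputSchema": {"type": "object",
                        "properties": {"invoice_id": {"type": "string"}},
                        "required": ["invoice_id"]},
        "fn": lambda args: {"invoice_id": args["invoice_id"], "status": "paid"},
    },
}

def handle(request_json: str) -> str:
    """Answer a JSON-RPC 2.0 request for tools/list or tools/call (simplified)."""
    req = json.loads(request_json)
    if req["method"] == "tools/list":
        result = {"tools": [{"name": name, "description": t["description"],
                             "inputSchema": t["inputSchema"]} for name, t in TOOLS.items()]}
    elif req["method"] == "tools/call":
        tool = TOOLS[req["params"]["name"]]
        output = tool["fn"](req["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}  # standard JSON-RPC code
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

# --- Client side: one adapter per LLM (N implementations) ---
def to_openai(tool: Dict[str, Any]) -> Dict[str, Any]:
    return {"type": "function", "function": {"name": tool["name"],
            "description": tool["description"], "parameters": tool["inputSchema"]}}

def to_claude(tool: Dict[str, Any]) -> Dict[str, Any]:
    return {"name": tool["name"], "description": tool["description"],
            "input_schema": tool["inputSchema"]}

listing = handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
listed = json.loads(listing)["result"]["tools"]
openai_tools = [to_openai(t) for t in listed]
claude_tools = [to_claude(t) for t in listed]

call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_invoice", "arguments": {"invoice_id": "INV-1"}}}
print(json.loads(handle(json.dumps(call)))["result"]["content"][0]["text"])
```

Adding a tool touches only TOOLS; adding an LLM means writing one more to_* adapter, which then covers every tool.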

Not-so-best case

While MCP significantly reduces integration complexity, it’s not a silver bullet. The reality involves several ongoing challenges and trade-offs that early adopters are discovering.

Performance Considerations

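The additional abstraction layer adds latency. On top of the underlying API call, each MCP call pays for JSON-RPC message encoding and decoding plus an extra hop between client and server (a local process over stdio, or a network round trip over HTTP). That overhead matters for real-time and other latency-sensitive use cases.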
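As a rough illustration of only the encoding part of that overhead, the sketch below times a direct call to a hypothetical get_invoice function against the same call wrapped in MCP-style JSON-RPC request and response messages. Everything runs in one process, so it deliberately leaves out transport cost, which in practice is often the larger share; timings vary by machine, so read the printed numbers as relative, not absolute.

```python
import json
import time
from typing import Any, Callable, Dict

def get_invoice(invoice_id: str) -> Dict[str, Any]:
    # Hypothetical tool standing in for a direct API call.
    return {"invoice_id": invoice_id, "status": "paid"}

def via_jsonrpc(invoice_id: str, request_id: int) -> Dict[str, Any]:
    """Simulate the encode/decode steps an MCP-style tools/call adds (no transport)."""
    request = json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
                          "params": {"name": "get_invoice",
                                     "arguments": {"invoice_id": invoice_id}}})
    # "Server": decode the request, run the tool, encode the result as text content.
    params = json.loads(request)["params"]
    output = get_invoice(**params["arguments"])
    response = json.dumps({"jsonrpc": "2.0", "id": request_id,
                           "result": {"content": [{"type": "text", "text": json.dumps(output)}]}})
    # "Client": decode the response, then the JSON text payload inside it.
    return json.loads(json.loads(response)["result"]["content"][0]["text"])

def time_it(fn: Callable[[int], Any], n: int = 10_000) -> float:
    """Average seconds per call over n calls."""
    start = time.perf_counter()
    for i in range(n):
        fn(i)
    return (time.perf_counter() - start) / n

direct = time_it(lambda i: get_invoice("INV-1"))
wrapped = time_it(lambda i: via_jsonrpc("INV-1", i))
print(f"direct: {direct * 1e6:.2f} us/call, json-rpc: {wrapped * 1e6:.2f} us/call")
```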

Learning Curve

Despite the simplification, MCP still requires developers to understand:

- The MCP specification

- How to properly structure tools and resources

- Best practices for error handling and state management

- Security implications of exposing APIs through MCP
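Error handling is a good example of why the specification matters. As we read the current MCP specification, failures come in two kinds: protocol errors (such as an unknown tool), returned as a JSON-RPC error object, and tool execution errors, returned as a normal result flagged with isError: true so the LLM can read the message and try something else. The sketch below follows that split for a hypothetical get_invoice tool; using -32602 for an unknown tool mirrors an example in the spec, but treat the exact codes as an assumption.

```python
import json
from typing import Any, Dict

def get_invoice(args: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical tool; fails for any invoice other than INV-1.
    if args["invoice_id"] != "INV-1":
        raise LookupError(f"invoice {args['invoice_id']} not found")
    return {"invoice_id": "INV-1", "status": "paid"}

TOOLS = {"get_invoice": get_invoice}

def call_tool(request_id: int, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build a JSON-RPC response for tools/call, keeping the two failure kinds apart."""
    if name not in TOOLS:
        # Protocol-level problem: answer with a JSON-RPC error object.
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    try:
        output = TOOLS[name](arguments)
        return {"jsonrpc": "2.0", "id": request_id,
                "result": {"content": [{"type": "text", "text": json.dumps(output)}],
                           "isError": False}}
    except Exception as exc:
        # The tool ran but failed: report it inside the result so the LLM can see it.
        return {"jsonrpc": "2.0", "id": request_id,
                "result": {"content": [{"type": "text", "text": str(exc)}],
                           "isError": True}}

print(call_tool(1, "get_invoice", {"invoice_id": "INV-2"})["result"])
print(call_tool(2, "delete_everything", {})["error"])
```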

Potential Limitations/Problems

Technical Limitations

- Scalability: Current MCP implementations haven’t been battle-tested at massive scale

- Security: The protocol is still evolving its security model

- Standardization: Risk of fragmentation if vendors start adding proprietary extensions

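- Tool Discovery: A client can list the tools a server exposes, but there is no standardized way to discover MCP servers in the first place, or to help an LLM understand which tool fits a request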
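Whatever security model the protocol settles on, a server that exposes business APIs should not trust tool arguments generated by an LLM. Below is a minimal sketch that checks arguments against a tool's inputSchema before running anything. It covers only required fields, unexpected fields and primitive types; the schema is hypothetical, and a real server would more likely use a full JSON Schema validator.

```python
from typing import Any, Dict, List

# Subset of JSON Schema primitive types mapped to Python types.
JSON_TYPES = {"string": str, "integer": int, "number": (int, float),
              "boolean": bool, "object": dict, "array": list}

def validate_arguments(schema: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the arguments passed this check."""
    problems = []
    props = schema.get("properties", {})
    for field in schema.get("required", []):
        if field not in args:
            problems.append(f"missing required field: {field}")
    for field, value in args.items():
        if field not in props:
            # Stricter than JSON Schema's default, which allows extra properties
            # unless additionalProperties is false.
            problems.append(f"unexpected field: {field}")
            continue
        declared = props[field].get("type")
        expected = JSON_TYPES.get(declared)
        # bool is a subclass of int in Python, so reject it for non-boolean types.
        is_bad_bool = isinstance(value, bool) and declared != "boolean"
        if expected and (not isinstance(value, expected) or is_bad_bool):
            problems.append(f"wrong type for {field}: expected {declared}")
    return problems

schema = {"type": "object",
          "properties": {"invoice_id": {"type": "string"}, "limit": {"type": "integer"}},
          "required": ["invoice_id"]}

print(validate_arguments(schema, {"invoice_id": "INV-1"}))
print(validate_arguments(schema, {"limit": "10", "path": "../etc"}))
```

Rejecting the call before execution, and returning the problem list to the client, keeps malformed or manipulated arguments away from the underlying API.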

Ecosystem Challenges

- Adoption: Requires a critical mass of both tool providers and LLM clients

- Documentation: Many MCP implementations lack comprehensive documentation

- Testing: Limited testing frameworks for MCP servers and clients

- Monitoring: Observability tools haven’t caught up with MCP adoption
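Until observability tooling catches up, a server can record basic per-tool metrics itself. The sketch below is a stdlib-only decorator that counts calls and errors and logs latency for each tool handler; the tool is hypothetical, and the in-memory STATS dictionary stands in for whatever metrics backend you actually export to.

```python
import functools
import logging
import time
from collections import defaultdict
from typing import Any, Callable, Dict

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("mcp.tools")

# Per-tool counters held in memory (a stand-in for a real metrics backend).
STATS: Dict[str, Dict[str, float]] = defaultdict(
    lambda: {"calls": 0, "errors": 0, "total_ms": 0.0})

def observed(tool_name: str) -> Callable:
    """Decorator that records call count, error count and latency for a tool handler."""
    def wrap(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def inner(*args: Any, **kwargs: Any) -> Any:
            stats = STATS[tool_name]
            stats["calls"] += 1
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            except Exception:
                stats["errors"] += 1
                log.exception("tool %s failed", tool_name)
                raise
            finally:
                # Runs on success and on failure, so every call is timed.
                elapsed_ms = (time.perf_counter() - start) * 1000
                stats["total_ms"] += elapsed_ms
                log.info("tool=%s latency_ms=%.2f", tool_name, elapsed_ms)
        return inner
    return wrap

@observed("get_invoice")
def get_invoice(invoice_id: str) -> Dict[str, Any]:
    # Hypothetical tool handler.
    if not invoice_id.startswith("INV-"):
        raise ValueError("bad invoice id")
    return {"invoice_id": invoice_id, "status": "paid"}

get_invoice("INV-1")
try:
    get_invoice("oops")
except ValueError:
    pass
print(dict(STATS["get_invoice"]))
```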

Possibilities (Near future)

The future of MCP looks promising with several exciting developments on the horizon:

- Enterprise Adoption: Leading products will offer MCP servers, making their services discoverable and usable by LLM applications.

- Perception of APIs: MCP operations will align more closely with business-level workflows, potentially creating parallel APIs for agents.

- Optimizing Tool Choice: Research will focus on helping LLMs choose the right tools dynamically and efficiently during conversations.

- Marketplace Growth: An MCP marketplace is emerging, where developers and companies can monetize MCP utilities.

- Enhanced Security: Standardized security protocols, similar to OpenID, are expected to emerge.

- More Optimization: Efficient techniques for tool invocation will become more common.
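One simple form of tool-choice optimization is to shortlist tools before the LLM sees them, so a large catalogue does not crowd the prompt. The sketch below is a deliberately naive stand-in for that research direction: it ranks hypothetical tools by word overlap between the user's message and each tool's name and description, and keeps the top k. Real systems would more likely use embeddings or the model itself; this only shows where such a filter sits.

```python
import re
from typing import Dict, List, Set

# Hypothetical tool catalogue: name -> description (as a client might get from tools/list).
TOOLS: Dict[str, str] = {
    "get_invoice": "Fetch an invoice and its payment status",
    "cancel_order": "Cancel an existing customer order",
    "list_customers": "List customers with optional filters",
    "refund_payment": "Refund a payment for an invoice",
}

def tokens(text: str) -> Set[str]:
    """Lowercase alphabetic words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def shortlist(query: str, tools: Dict[str, str], k: int = 2) -> List[str]:
    """Rank tools by word overlap with the user's message and keep the top k."""
    q = tokens(query)
    scored = []
    for name, desc in tools.items():
        overlap = len(q & (tokens(name.replace("_", " ")) | tokens(desc)))
        if overlap:
            scored.append((overlap, name))
    # Highest overlap first; ties broken by name so the result is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:k]]

print(shortlist("What is the payment status of my invoice?", TOOLS))
# → ['get_invoice', 'refund_payment']
```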

Conclusion

Just imagine being a user who can get everything done directly from Claude Desktop. Or imagine being a SaaS platform that spends a couple of months exposing its APIs via MCP, and suddenly users start accessing it from chat applications, a new channel that comes essentially for free!