About:

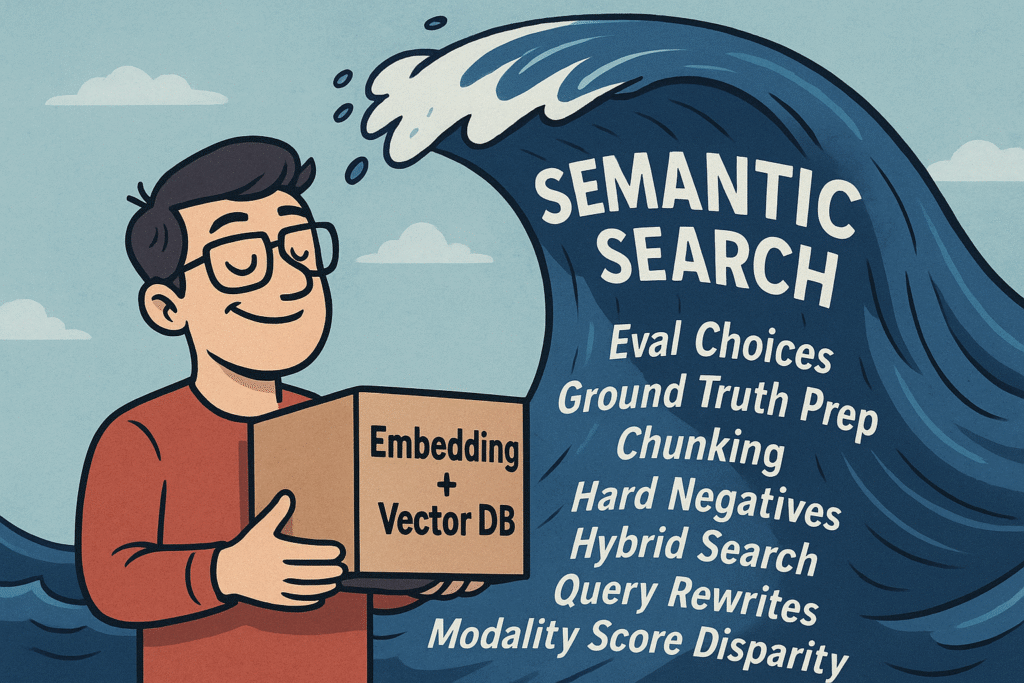

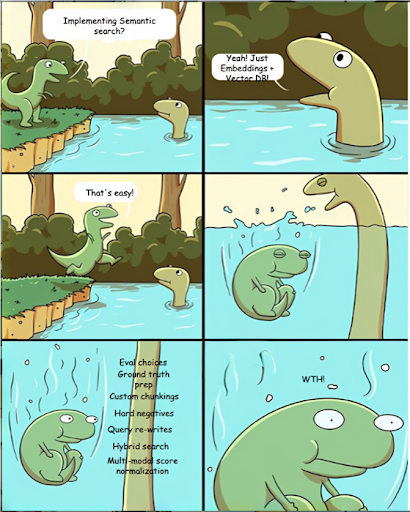

We often hear people tell us:

“Oh! Semantic search is just storing embeddings in a vector DB. Can be done in a month, right?”

Well, if that were all there is to it, it could be done pretty quickly. But getting it to production and making it usable for enterprise users requires more than that!

This blog covers some of the problems you’ll have to solve to take a semantic search implementation to production for enterprise users.

Eval choices:

Evaluation is an important part of any AI problem, and semantic search is no exception. There is a wide variety of eval choices to pick from, and the first decision is choosing the metric that meets your business users’ needs.

For example, if your users (or use case) demand that the “most relevant” result stay above a “relatively less relevant” one, then nDCG is probably what you need, since it rewards graded relevance according to position.
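To make this concrete, here is a minimal sketch of nDCG@k. It assumes graded relevance labels (0 = irrelevant, 1 = somewhat relevant, 2 = most relevant) and the linear-gain form of DCG; some implementations use an exponential gain (2^rel - 1) instead, so treat the exact numbers as illustrative.

```python
import math


def dcg_at_k(gains, k):
    """Discounted cumulative gain over the top-k relevance labels (linear gain)."""
    return sum(g / math.log2(rank + 2) for rank, g in enumerate(gains[:k]))


def ndcg_at_k(ranked_doc_ids, relevance, k=10):
    """nDCG@k: DCG of the system ranking divided by DCG of the ideal ranking.

    ranked_doc_ids: doc IDs in the order the search system returned them.
    relevance: dict of doc ID -> graded label; unlabelled IDs count as 0.
    """
    gains = [relevance.get(doc_id, 0) for doc_id in ranked_doc_ids]
    ideal = sorted(relevance.values(), reverse=True)
    ideal_dcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


labels = {"a": 2, "b": 1, "c": 0}
print(ndcg_at_k(["a", "b", "c"], labels))  # 1.0 (ideal order)
print(ndcg_at_k(["b", "a", "c"], labels))  # ~0.86 (most relevant doc pushed to second)
```

Pushing the most relevant document down to second place lowers the score, which is the behaviour you want when the top slot matters most.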

If you’re willing to compromise on the order among relevant results, as long as all relevant results are ranked above the irrelevant ones, then MAP may be a better fit. (We’ll publish a detailed playbook of eval metrics for semantic search in a later blog.) The point is, you have to make a call and align your feedback loop accordingly, and it’s very important that this choice aligns with your business needs.
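For comparison, here is a minimal sketch of average precision (AP) and MAP with binary relevance labels. Swapping two relevant documents leaves AP unchanged, while ranking an irrelevant document above them lowers it.

```python
def average_precision(ranked_doc_ids, relevant_ids):
    """AP: mean of precision@i at each rank i where a relevant doc appears.

    Relevant docs that were never retrieved contribute 0 (we divide by the
    total number of relevant docs, not the number retrieved).
    """
    hits, precision_sum = 0, 0.0
    for rank, doc_id in enumerate(ranked_doc_ids, start=1):
        if doc_id in relevant_ids:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant_ids) if relevant_ids else 0.0


def mean_average_precision(runs):
    """MAP over queries; runs is a list of (ranked_doc_ids, relevant_ids) pairs."""
    if not runs:
        return 0.0
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)


relevant = {"a", "b"}
print(average_precision(["a", "b", "c"], relevant))  # 1.0
print(average_precision(["b", "a", "c"], relevant))  # 1.0 (order among relevant docs ignored)
print(average_precision(["c", "a", "b"], relevant))  # ~0.583 (irrelevant doc on top)
```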

Ground truth prep:

Once you’ve settled on your eval metric, you’ll have to start preparing the ground truth for your evaluation. This involves ensuring that:

- You start with data that is representative of your production data.

- You use representative queries.

- You have an appropriate way of labelling, such as:

  - Human feedback from your system.

  - Automated labelling using LLMs.
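One way to hold this ground truth is as a list of judgments tagged with their source. In the sketch below, the `Judgment` type, the `"human"`/`"llm"` source tags and both helpers are hypothetical (not from any specific tool). It merges labels into a qrels-style dict, letting human labels override LLM labels for the same query/document pair, and measures how often the LLM agrees with humans on pairs both have labelled, as a rough sanity check before relying on LLM-only labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Judgment:
    query: str
    doc_id: str
    grade: int   # graded relevance, e.g. 0 = irrelevant ... 2 = highly relevant
    source: str  # hypothetical tags: "human" or "llm"


def build_qrels(judgments):
    """Merge judgments into {query: {doc_id: grade}}.

    A human label overrides an LLM label for the same (query, doc_id) pair;
    among labels from the same source, the first one seen is kept.
    """
    priority = {"llm": 0, "human": 1}
    chosen = {}
    for j in judgments:
        key = (j.query, j.doc_id)
        current = chosen.get(key)
        if current is None or priority[j.source] > priority[current.source]:
            chosen[key] = j
    qrels = {}
    for (query, doc_id), j in chosen.items():
        qrels.setdefault(query, {})[doc_id] = j.grade
    return qrels


def llm_agreement(judgments):
    """Fraction of pairs labelled by both a human and the LLM with matching grades."""
    human = {(j.query, j.doc_id): j.grade for j in judgments if j.source == "human"}
    llm = {(j.query, j.doc_id): j.grade for j in judgments if j.source == "llm"}
    overlap = human.keys() & llm.keys()
    return sum(human[k] == llm[k] for k in overlap) / len(overlap) if overlap else None


js = [
    Judgment("q1", "d1", 2, "llm"),
    Judgment("q1", "d1", 1, "human"),
    Judgment("q1", "d2", 0, "llm"),
    Judgment("q2", "d3", 2, "human"),
    Judgment("q2", "d3", 2, "llm"),
]
print(build_qrels(js))    # {'q1': {'d1': 1, 'd2': 0}, 'q2': {'d3': 2}}
print(llm_agreement(js))  # 0.5
```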

Custom Chunking:

How you split your data (chunking) can make or break retrieval quality, and the right chunking strategy varies with the data you’re handling. A system that serves a variety of data types through unified search typically needs custom chunkers.

For example, a simple “RecursiveCharacterTextSplitter” might work well for simple text such as names and short descriptions, whereas subtitles need a custom chunker of their own. Though all of these are text, there is “no silver bullet” that solves them all.
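As an illustration of a data-specific chunker, here is a minimal sketch of a subtitle chunker, assuming SRT-formatted input. It groups consecutive cues into chunks of roughly `window_seconds` (an arbitrary default of 30 s) and keeps start/end timestamps so a search hit can point to the right moment in the video. The function names and window size are hypothetical; tune them against your eval set.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    start: float  # seconds
    end: float    # seconds
    text: str


# Matches an SRT timing line such as "00:00:01,000 --> 00:00:04,000".
TIMING = re.compile(r"(\d+):(\d+):(\d+)[,.](\d+)\s*-->\s*(\d+):(\d+):(\d+)[,.](\d+)")


def _to_seconds(h, m, s, ms):
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000


def parse_srt(srt_text):
    """Parse SRT cues into (start, end, text) tuples."""
    cues = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.strip().splitlines()
        for i, line in enumerate(lines):
            match = TIMING.search(line)
            if match:
                g = match.groups()
                text = " ".join(ln.strip() for ln in lines[i + 1:] if ln.strip())
                cues.append((_to_seconds(*g[:4]), _to_seconds(*g[4:]), text))
                break
    return cues


def chunk_subtitles(srt_text, window_seconds=30.0):
    """Merge consecutive cues while the chunk spans at most window_seconds.

    A single cue longer than the window still becomes its own chunk.
    """
    chunks = []
    for start, end, text in parse_srt(srt_text):
        if chunks and end - chunks[-1].start <= window_seconds:
            chunks[-1].end = end
            chunks[-1].text += " " + text
        else:
            chunks.append(Chunk(start, end, text))
    return chunks


srt = """1
00:00:01,000 --> 00:00:04,000
Hello there.

2
00:00:05,000 --> 00:00:09,500
How are you?

3
00:00:40,000 --> 00:00:43,000
Fine, thanks."""
print([(c.start, c.end) for c in chunk_subtitles(srt)])  # [(1.0, 9.5), (40.0, 43.0)]
```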

Hard negatives:

Hard negatives are irrelevant (“negative”) results that the system scores as more relevant than the actually relevant results. Fixing them needs deeper analysis: sometimes a simple fix such as a “stop-word pattern” is enough, and in other cases it might require model fine-tuning.
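A first step in that analysis is finding the hard negatives. The sketch below assumes you have per-query similarity scores from your retriever and the relevant set from your ground truth; it flags irrelevant documents that score above at least one relevant document. The (query, positive, hard negative) triplets it builds are a common input format for contrastive fine-tuning of embedding models. The function names are hypothetical.

```python
def mine_hard_negatives(scored_results, relevant_ids):
    """Return irrelevant doc IDs scored above at least one relevant doc, best first.

    scored_results: dict of doc_id -> similarity score for one query.
    relevant_ids: set of doc IDs labelled relevant in the ground truth.
    """
    relevant_scores = [s for d, s in scored_results.items() if d in relevant_ids]
    if not relevant_scores:
        return []
    threshold = min(relevant_scores)
    negatives = [(d, s) for d, s in scored_results.items()
                 if d not in relevant_ids and s > threshold]
    return [d for d, _ in sorted(negatives, key=lambda pair: pair[1], reverse=True)]


def to_training_triplets(query, scored_results, relevant_ids):
    """Pair every relevant doc with every mined hard negative for this query."""
    negatives = mine_hard_negatives(scored_results, relevant_ids)
    return [(query, pos, neg) for pos in sorted(relevant_ids) for neg in negatives]


scores = {"d1": 0.82, "d2": 0.91, "d3": 0.75, "d4": 0.88}
print(mine_hard_negatives(scores, {"d1", "d3"}))  # ['d2', 'd4']
```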

Hybrid search:

An enterprise system will typically require you to perform semantic search in addition to lexical and filter-based search. This isn’t much of a problem if your vector DB supports it, but it is a long-term concern that you should weigh when making your design and architectural choices.
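If you end up merging ranked lists yourself, Reciprocal Rank Fusion (RRF) is one widely used way to combine lexical and semantic results without calibrating their raw scores against each other. This is a generic sketch, not any vector DB’s API: the metadata filtering is done in Python for illustration, whereas in practice you would usually push filters into the search engine’s query. `k=60` is the constant commonly used with RRF.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of doc IDs: score(d) = sum over lists of 1 / (k + rank)."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


def hybrid_search(lexical_ranking, semantic_ranking, metadata, filters, k=60):
    """Drop docs failing exact-match metadata filters, then fuse both rankings."""
    def passes(doc_id):
        meta = metadata.get(doc_id, {})
        return all(meta.get(field) == value for field, value in filters.items())

    lexical = [d for d in lexical_ranking if passes(d)]
    semantic = [d for d in semantic_ranking if passes(d)]
    return reciprocal_rank_fusion([lexical, semantic], k=k)


metadata = {"a": {"lang": "en"}, "b": {"lang": "en"}, "c": {"lang": "de"}, "d": {"lang": "en"}}
print(reciprocal_rank_fusion([["a", "b", "c"], ["b", "d", "a"]]))            # ['b', 'a', 'd', 'c']
print(hybrid_search(["a", "b", "c"], ["b", "d", "a"], metadata, {"lang": "en"}))  # ['b', 'a', 'd']
```

Because RRF only uses ranks, a document that appears high in both lists (here `b`) wins even if neither retriever ranked it first everywhere.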

Query rewrites:

Not all problems can be solved with plain embeddings. Embeddings work well for semantically rich content, such as natural-language text.

Content that is less semantically rich won’t be handled well by embeddings alone; it will require either rewriting the content at indexing time or rewriting the query at query time.
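The post doesn’t prescribe a rewriting method. One simple option is expanding domain abbreviations into natural language before embedding, while keeping the original terms for lexical matching. In this sketch the `EXPANSIONS` dictionary and its entries are hypothetical; in practice such a mapping might be curated by hand or generated offline, and an LLM-based rewriter is another option. The same function can be applied to document text at indexing time.

```python
import re

# Hypothetical domain dictionary: abbreviation -> natural-language expansion.
EXPANSIONS = {
    "po": "purchase order",
    "sla": "service level agreement",
    "hr": "human resources",
}


def rewrite_query(text, expansions=EXPANSIONS):
    """Append expansions for known abbreviations (deduplicated, in order of appearance)."""
    tokens = re.findall(r"\w+", text.lower())
    extra = list(dict.fromkeys(expansions[t] for t in tokens if t in expansions))
    return text if not extra else f"{text} ({'; '.join(extra)})"


print(rewrite_query("PO approval SLA"))
# PO approval SLA (purchase order; service level agreement)
```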

Multi-modal similarity score disparity:

When you’re implementing systems that have to support multi-modal semantic search, you’ll have to solve the problem of score disparity across modalities.

Fact: the range of similarity scores differs across modalities.

For example, two similar texts may have a similarity score around 0.9, whereas a text and its corresponding image may only score in the 0.3-0.4 range. You’ll need to account for this disparity. (More on this in a later blog!)
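As one possible approach (not necessarily the one the later blog will describe), here is a sketch of per-modality z-score normalization. It assumes you can collect a sample of raw similarity scores for each modality pair, such as text-to-text and text-to-image, and that those samples are representative of production. The calibration numbers below are illustrative, not measurements. Min-max scaling or a learned calibration are alternatives.

```python
from statistics import mean, pstdev


class ModalityNormalizer:
    """Z-score normalization of raw similarity scores, fitted per modality pair."""

    def __init__(self):
        self.stats = {}

    def fit(self, modality, sample_scores):
        sd = pstdev(sample_scores)
        # Guard against a zero spread so normalize() never divides by zero.
        self.stats[modality] = (mean(sample_scores), sd if sd > 0 else 1.0)

    def normalize(self, modality, score):
        mu, sd = self.stats[modality]
        return (score - mu) / sd


def merge_results(results, normalizer):
    """results: list of (doc_id, modality, raw_score); rank by normalized score."""
    ranked = sorted(results, key=lambda r: normalizer.normalize(r[1], r[2]), reverse=True)
    return [doc_id for doc_id, _, _ in ranked]


norm = ModalityNormalizer()
norm.fit("text", [0.80, 0.85, 0.90, 0.95])   # illustrative text-to-text scores
norm.fit("image", [0.25, 0.30, 0.35, 0.40])  # illustrative text-to-image scores
hits = [("t1", "text", 0.86), ("i1", "image", 0.39)]
print(merge_results(hits, norm))  # ['i1', 't1']
```

On raw scores the text hit would always win; after normalization, an image that is unusually similar for its modality can outrank an average text match.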

Summary:

It’s true that a semantic search system can be put together quickly. But getting it right requires meticulous effort. Do talk to us if you’re in the process of implementing semantic search for your enterprise.